AI can write code fast. That’s not the hard part anymore. The hard part is turning “fast code” into “correct code” without a human babysitting every step.

A closed loop is simple: change → verify → learn → change again, until it converges. No vibes. Just feedback.
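Stripped down, the loop is only a few lines. Here is a minimal Python sketch that assumes hypothetical `change` and `verify` callbacks. In practice, `change` would be the agent editing code and `verify` would be whatever runs the tests, CI, or snapshot checks. The `max_rounds` budget is also an assumption: it makes a loop that doesn't converge stop and go back to a human instead of spinning forever.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class LoopResult:
    converged: bool               # True if verify() eventually reported no problems
    rounds: int                   # how many change -> verify rounds ran
    last_feedback: Optional[str]  # unresolved feedback when it did not converge


def closed_loop(
    change: Callable[[Optional[str]], None],
    verify: Callable[[], Optional[str]],
    max_rounds: int = 5,
) -> LoopResult:
    """Repeat change -> verify -> feed back until verify() returns None.

    `change` receives the previous round's feedback (None on the first round).
    `verify` returns None when everything passes, or a description of what broke.
    If the round budget runs out, the caller hands the result to a human.
    """
    feedback: Optional[str] = None
    for round_number in range(1, max_rounds + 1):
        change(feedback)     # the agent edits code, informed by the last feedback
        feedback = verify()  # tests / CI / snapshots report what actually happened
        if feedback is None:
            return LoopResult(True, round_number, None)
    return LoopResult(False, max_rounds, feedback)
```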

Why it matters

AI is great at generating plausible code. Plausible code passes reviews. Plausible code also ships bugs.

A closed loop forces reality into the conversation:

- tests fail → the agent deals with it

- UI shifts → the snapshot diff is the truth

- CI complains → fix it or it doesn’t merge

That’s how AI coding becomes reliable: the loop becomes the reviewer.

Less human over time

Humans still set direction and acceptance criteria. But the day-to-day loop (edit, run, fix, repeat) should get increasingly automated. Humans step in when:

- requirements are unclear

- tradeoffs need judgement

- something genuinely new shows up

Real examples

This is what I’m using in my apps, and it’s the pattern that keeps working.

Tests

- Agent changes code

- Run unit + UI tests

- Feed failures back to the agent

- Agent fixes and retries

- Repeat until green
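The "run tests, feed failures back" steps above can be sketched as a small helper. This is a minimal sketch assuming a pytest suite: `DEFAULT_TEST_COMMAND`, `run_tests`, and `failure_prompt` are hypothetical names, and the prompt wording is only an illustration. For an app you would swap in your own test command.

```python
import subprocess
import sys
from typing import Optional, Sequence

# Placeholder command: assumes a pytest suite. Replace with your own runner,
# e.g. an `xcodebuild test ...` invocation for an iOS app.
DEFAULT_TEST_COMMAND = [sys.executable, "-m", "pytest", "-q"]


def run_tests(command: Sequence[str] = DEFAULT_TEST_COMMAND, max_chars: int = 4000) -> Optional[str]:
    """Run the test suite once.

    Returns None when the command exits with code 0. Otherwise returns the
    last `max_chars` characters of stdout + stderr, which is the part to feed
    back to the agent (most runners print their failure summary at the end).
    """
    result = subprocess.run(list(command), capture_output=True, text=True)
    if result.returncode == 0:
        return None
    output = result.stdout + result.stderr
    return output[-max_chars:]


def failure_prompt(output: str) -> str:
    """Wrap failing test output in a short instruction for the agent (hypothetical wording)."""
    return "The test suite failed. Fix the cause and run the tests again.\n\n" + output
```

The agent gets `failure_prompt(output)` as its next input, and the loop ends when `run_tests()` returns `None`.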

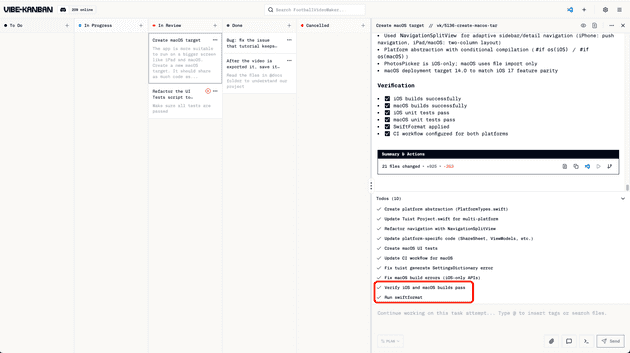

You should never use an AI coding agent without verifying its output. Unit tests are required. Additional layers, such as UI tests and snapshot tests, are strongly recommended.

In my app, after every task, the AI agent must run all tests, read the output, and verify that every test passes before concluding the task.
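"Read the output and verify" can be made mechanical, so "done" means more than "the command didn't crash." A minimal sketch, assuming a pytest-style summary line (other runners print different summaries and would need their own pattern). `parse_summary` and `task_is_done` are hypothetical helpers.

```python
import re
from typing import Dict

# Assumes a pytest-style summary line, e.g. "=== 1 failed, 41 passed in 1.30s ===".
# Other runners (XCTest, Jest, ...) print different summaries and need their own pattern.
_COUNT = re.compile(r"(\d+) (passed|failed|errors?)\b")


def parse_summary(output: str) -> Dict[str, int]:
    """Read passed/failed/error counts from the last line that mentions any of them."""
    counts = {"passed": 0, "failed": 0, "error": 0}
    for line in reversed(output.strip().splitlines()):
        matches = _COUNT.findall(line)
        if matches:
            for number, label in matches:
                key = "error" if label.startswith("error") else label
                counts[key] += int(number)
            break
    return counts


def task_is_done(exit_code: int, output: str) -> bool:
    """Done only if the runner succeeded AND the output shows tests ran and all passed.

    Checking the output as well as the exit code guards against a run that
    reports success without actually executing any tests.
    """
    counts = parse_summary(output)
    return (
        exit_code == 0
        and counts["passed"] > 0
        and counts["failed"] == 0
        and counts["error"] == 0
    )
```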

CI loop

Local tests are nice. CI is the gate.

Using Conductor and VibeKanban, we’re closing the loop across GitHub:

- modify code

- open a PR

- read GitHub Actions output (build, lint, tests)

- fix issues

- push again
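The "read GitHub Actions output" step can be approximated with the GitHub CLI. This is not how Conductor or VibeKanban implement it. It's a minimal sketch assuming `gh` is installed and authenticated, and that only the most recent workflow run on the branch matters (repos with several workflows would need to check each one). `run_gh` and `ci_feedback` are hypothetical helpers; the `run` parameter is injectable so the logic can be tried without a network.

```python
import json
import subprocess
from typing import Callable, List, Optional, Tuple

Runner = Callable[[List[str]], str]


def run_gh(args: List[str]) -> str:
    """Run a GitHub CLI (`gh`) command and return its stdout; raises if it fails."""
    return subprocess.run(args, capture_output=True, text=True, check=True).stdout


def ci_feedback(branch: str, run: Runner = run_gh, tail_lines: int = 200) -> Tuple[str, Optional[str]]:
    """Check the most recent workflow run on `branch`.

    Returns ("passed", None), ("pending", None), ("missing", None), or
    ("failed", log_tail), where log_tail is the end of the failed jobs' logs,
    ready to hand to the agent.
    """
    runs = json.loads(run([
        "gh", "run", "list", "--branch", branch, "--limit", "1",
        "--json", "databaseId,status,conclusion",
    ]))
    if not runs:
        return ("missing", None)
    latest = runs[0]
    if latest["status"] != "completed":
        return ("pending", None)
    if latest["conclusion"] == "success":
        return ("passed", None)
    # Any other conclusion (failure, cancelled, ...) is treated as something to fix.
    log = run(["gh", "run", "view", str(latest["databaseId"]), "--log-failed"])
    return ("failed", "\n".join(log.splitlines()[-tail_lines:]))
```

After each push, an agent loop would poll this while it reports `pending` and feed the log tail back to the agent on `failed`.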

If the agent can’t get through CI, it isn’t shipping broken code; it’s producing drafts for a human to review.

Conductor has a nice feature that fetches logs from GitHub Actions and feeds them back to AI agents. This makes it very easy for Claude Code and similar agents to fix GitHub Actions configurations and stay in the loop.

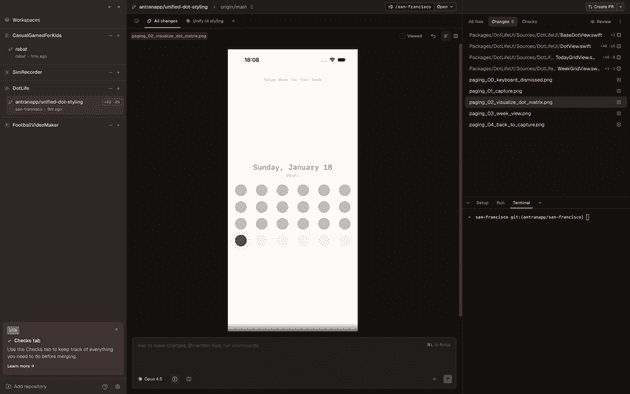

UI snapshots

UI is where “looks fine” turns into regressions.

Snapshot tests make UI work measurable:

- change UI code

- run snapshots

- diff shows what actually changed

- adjust until it matches intent

It turns UI into the same loop as logic: change → verify → diff → fix.
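Here is a minimal sketch of the verify-and-diff step, under a simplifying assumption: the snapshot is text (for example, a serialized view hierarchy) rather than an image, so the diff can be pasted straight into the agent's context. Image-based snapshot tools compare pixels instead. `check_snapshot` and the `.txt` reference files are hypothetical.

```python
import difflib
from pathlib import Path
from typing import Optional


def check_snapshot(name: str, rendered: str, snapshot_dir: Path, record: bool = False) -> Optional[str]:
    """Compare `rendered` against the stored reference snapshot `<name>.txt`.

    Returns None when they match. Otherwise returns a unified diff, or a note
    that a new reference was recorded; either way it is what the agent sees.
    """
    reference = snapshot_dir / f"{name}.txt"
    if record or not reference.exists():
        # First run (or explicit re-record): store the baseline, but still report it.
        snapshot_dir.mkdir(parents=True, exist_ok=True)
        reference.write_text(rendered)
        return f"recorded new reference snapshot {reference.name}; review it before trusting it"
    expected = reference.read_text()
    if expected == rendered:
        return None
    diff = difflib.unified_diff(
        expected.splitlines(keepends=True),
        rendered.splitlines(keepends=True),
        fromfile=f"{name} (reference)",
        tofile=f"{name} (current)",
    )
    return "".join(diff)
```

Reporting a freshly recorded reference as a non-match is deliberate: a new baseline gets looked at (by the agent or by you) before it is trusted.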

When working on my app, I often ask the AI to generate UI tests. On every task, the AI agent runs the UI tests, takes the snapshots, and verifies the UI changes. It also makes it easy for me to verify the UI changes without running the app manually.

The shift

Using AI for coding is inevitable, and the shift is already obvious. We need to shift our mindset about how to produce good code as well. One way to control quality is to let the AI fix its own output through a closed feedback loop.